Instagram is overhauling the way it works for teenagers, promising more “built-in protections” for young people and added controls and reassurance for parents.

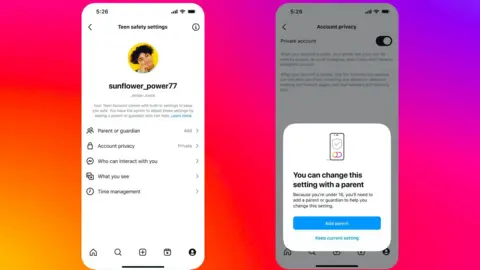

The new “teen accounts”, for children aged 13 to 15, will force many privacy settings to be on by default, rather than a child opting in.

Teenagers’ posts will also be set to private – making them unviewable to people who don’t follow them, and they must approve all new followers.

These settings can only be changed by giving a parent or guardian oversight of the account, or when the child turns 16.

Social media companies are under pressure worldwide to make their platforms safer, with concerns that not enough is being done to shield young people from harmful content.

The NSPCC called the announcement a “step in the right direction” but said Instagram’s owner, Meta, appeared to be “putting the emphasis on children and parents needing to keep themselves safe.”

Rani Govender, the NSPCC’s online child safety policy manager, said Meta and other social media companies needed to take more action themselves.

“This must be backed up by proactive measures that prevent harmful content and sexual abuse from proliferating Instagram in the first place, so all children have the benefit of comprehensive protections on the products they use,” she said.

Meta describes the changes as a “new experience for teens, guided by parents”, and says they will “better support parents, and give them peace of mind that their teens are safe with the right protections in place.”

However, media regulator Ofcom raised concerns in April over parents’ willingness to intervene to keep their children safe online.

In a talk last week, senior Meta executive Sir Nick Clegg said: “One of the things we do find… is that even when we build these controls, parents don’t use them.”

Ian Russell, whose daughter Molly viewed content about self-harm and suicide on Instagram before taking her life aged 14, told the BBC it was important to wait and see how the new policy was implemented.

“Whether it works or not we’ll only find out when the measures come into place,” he said.

“Meta is very good at drumming up PR and making these big announcements, but what they also have to be good at is being transparent and sharing how well their measures are working.”

How will it work?

Teen accounts will mostly change the way Instagram works for users between the ages of 13 and 15, with a number of settings turned on by default.

These include strict controls on sensitive content to prevent recommendations of potentially harmful material, and muted notifications overnight.

Accounts will also be set to private rather than public – meaning teenagers will have to actively accept new followers and their content cannot be viewed by people who don’t follow them.

Parents who choose to supervise their child’s account will be able to see who they message and the topics they have said they are interested in, though they will not be able to view the content of messages.

Instagram says it will begin moving millions of existing teen users into the new experience within 60 days of notifying them of the changes.

Age identification

The system will primarily rely on users being honest about their ages – though Instagram already has tools that seek to verify a user’s age if there are suspicions they are not telling the truth.

From January, in the US, it will also start using artificial intelligence (AI) tools to try and proactively detect teens using adult accounts, to put them back into a teen account.

The UK’s Online Safety Act, passed in October 2023, requires online platforms to take action to keep children safe, or face huge fines.

Ofcom warned social media sites in May they could be named and shamed – and banned for under-18s – if they fail to comply with new online safety rules.

Social media industry analyst Matt Navarra described the changes as significant – but said they hinged on enforcement.

“As we’ve seen with teens throughout history, in these sorts of scenarios, they will find a way around the blocks, if they can,” he told the BBC.

“So I think Instagram will need to ensure that safeguards can’t easily be bypassed by more tech-savvy teens.”

Questions for Meta

Instagram is by no means the first platform to introduce such tools for parents – and it already claims to have more than 50 tools aimed at keeping teens safe.

It introduced a family centre and supervision tools for parents in 2022 that allowed them to see the accounts their child follows and who follows them, among other features.

Snapchat also introduced its own family centre letting parents over the age of 25 see who their child is messaging and limit their ability to view certain content.

In early September YouTube said it would limit recommendations of certain health and fitness videos to teenagers, such as those which “idealise” certain body types.

Instagram already uses age verification technology, in the form of a video selfie, to check the age of teens who try to change their age to over 18.

This raises the question of why, despite the large number of protections on Instagram, young people are still exposed to harmful content.

An Ofcom study earlier this year found that every single child it spoke to had seen violent material online, with Instagram, WhatsApp and Snapchat being the most frequently named services they found it on.

While these are also among the biggest platforms, the finding is a clear indication of a problem that has not yet been solved.

Under the Online Safety Act, platforms will have to show they are committed to removing illegal content, including child sexual abuse material (CSAM) or content that promotes suicide or self-harm.

But the rules are not expected to fully take effect until 2025.

In Australia, Prime Minister Anthony Albanese recently announced plans to ban social media for children by introducing a new minimum age for using the platforms.

Instagram’s latest tools put control more firmly in the hands of parents, who will now take even more direct responsibility for deciding whether to allow their child more freedom on Instagram, and supervising their activity and interactions.

They will of course also need to have their own Instagram account.

But ultimately, parents do not run Instagram itself and cannot control the algorithms which push content towards their children, or what is shared by its billions of users around the world.

Social media expert Paolo Pescatore said it was an “important step in safeguarding children’s access to the world of social media and fake news.”

“The smartphone has opened up to a world of disinformation, inappropriate content fuelling a change in behaviour among children,” he said.

“More needs to be done to improve children’s digital wellbeing and it starts by giving control back to parents.”