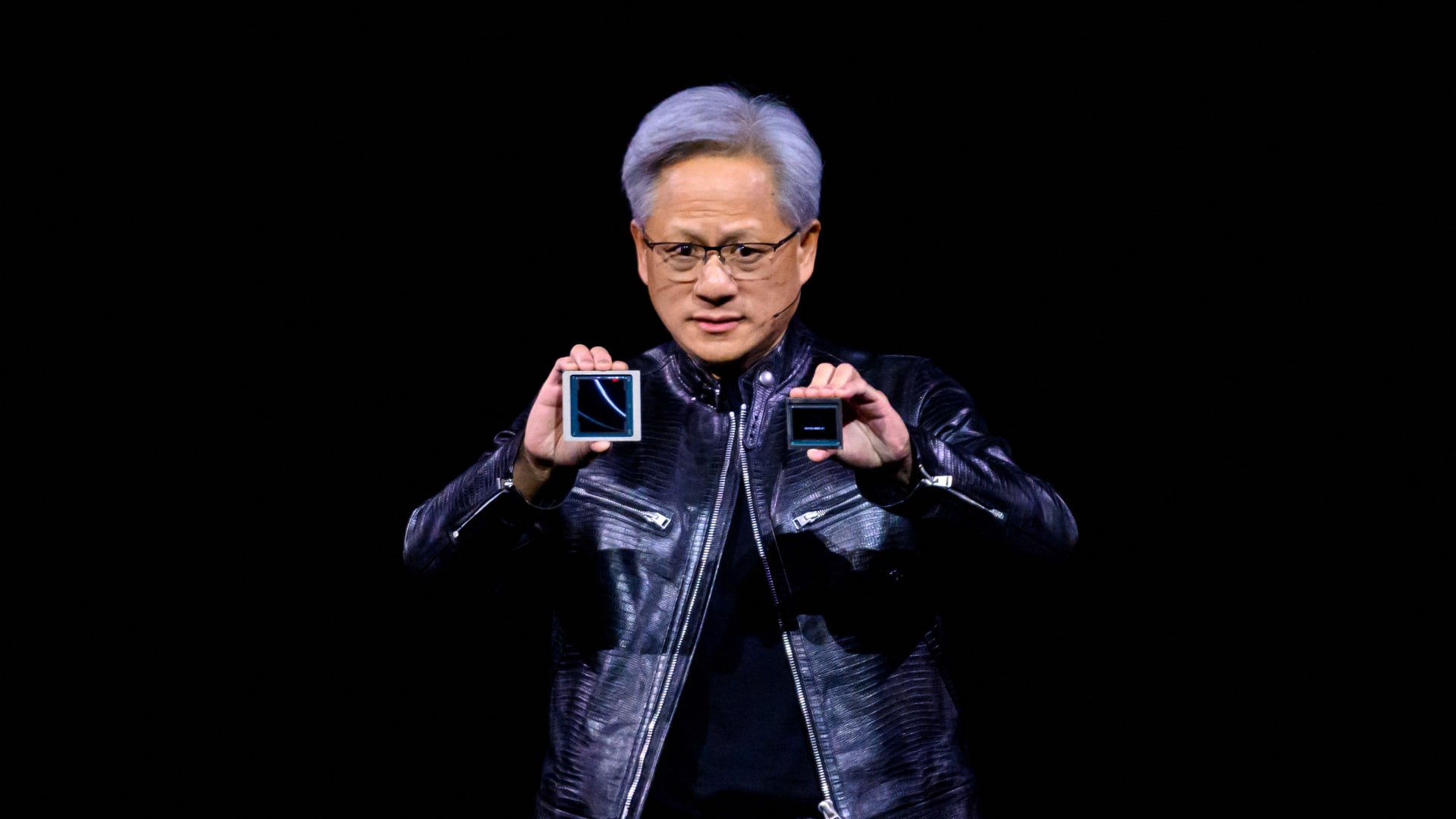

In the wake of Nvidia's launch of powerful new artificial intelligence chips, Goldman Sachs is predicting major growth for memory chips used in AI systems.

Called Blackwell, the new GPU chips from Nvidia that power AI models require the latest third-generation high-bandwidth memory (HBM) chips, known as HBM3E.

The investment bank expects the total addressable market for HBM to expand tenfold to $23 billion by 2026, up from just $2.3 billion in 2022.

The Wall Street bank sees three major memory makers as prime beneficiaries of the booming HBM market: SK Hynix, Samsung Electronics and Micron. All three stocks are also traded in the U.S., Germany and the U.K.

Investors can also gain exposure to the three stocks through exchange-traded funds. While the Invesco Next Gen Connectivity ETF (KNCT) holds all three stocks in a highly concentrated manner, the WisdomTree Artificial Intelligence and Innovation Fund (WTAI) has an allocation of less than 2% to each.

Goldman Sachs said stronger AI demand was driving higher AI server shipments and greater memory chip density per GPU (the chip powering AI), leading its analysts to “meaningfully raise” their estimates.

Goldman analysts led by Giuni Lee said in a note to clients on March 22 that all three “will benefit from the strong growth in the HBM market and the tight [supply/demand], as this is leading to a continued substantial HBM pricing premium and likely accretion to each company’s overall DRAM margin.”

Investors have been cautious about the memory market for AI systems because all three major suppliers are due to expand manufacturing capacity, which could put downward pressure on profit margins.

However, Goldman analysts believe challenges such as larger chip sizes and lower production yields for HBM compared with conventional DRAM memory chips are likely to keep supply tight in the near future.

The Wall Street bank is not alone in its view. Citi analysts gave clients similar advice in February.
“Despite market concerns on potential HBM oversupply as all three DRAM makers enter the HBM3E space, we see sustained supply tightness in HBM3E space given demand growth from Nvidia and other AI clients amid limited supply growth on low yield and increased memory fabrication complexity,” said Citi analyst Peter Lee in a note to clients on Feb. 27.

Goldman also cited suppliers as saying “that their HBM capacity for 2024 is fully booked, while 2025 supply is already being allocated to customers.”

The investment bank expects SK Hynix to maintain over 50% market share for at least the next few years, thanks to its “strong customer/supply chain relationship” and its technology, which is believed to have “better productivity and yield compared to its peers’ solutions.”

The Goldman analysts also said Samsung Electronics has “the opportunity to gain market share over the medium term.”

Earlier this month, Nvidia CEO Jensen Huang hinted during a media briefing that his firm is in the process of qualifying Samsung Electronics’ latest HBM3E chips for its graphics processing units.

Meanwhile, Micron could start outgrowing rivals in 2025 by narrowing its focus on the HBM3E standard, according to the investment bank.

CNBC’s Michael Bloom contributed to this report.