Artificial intelligence pioneer Geoffrey Hinton speaks at the Thomson Reuters Financial and Risk Summit in Toronto, December 4, 2017.

Mark Blinch | Reuters

Geoffrey Hinton, known as “The Godfather of AI,” received his Ph.D. in artificial intelligence 45 years ago and has remained one of the most respected voices in the field.

For the past decade, Hinton worked part-time at Google, splitting his time between the company’s Silicon Valley headquarters and Toronto. But he has quit the internet giant, and he told The New York Times that he’ll be warning the world about the potential threat of AI, which he said is coming sooner than he previously thought.

“I thought it was 30 to 50 years or even longer away,” Hinton told the Times, in a story published Monday. “Obviously, I no longer think that.”

Hinton, who was named a 2018 Turing Award winner for conceptual and engineering breakthroughs, said he now has some regrets over his life’s work, the Times reported. He cited the near-term risks of AI taking jobs, and the proliferation of fake photos, videos and text that appear real to the average person.

In a statement to CNBC, Hinton said, “I now think the digital intelligences we are creating are very different from biological intelligences.”

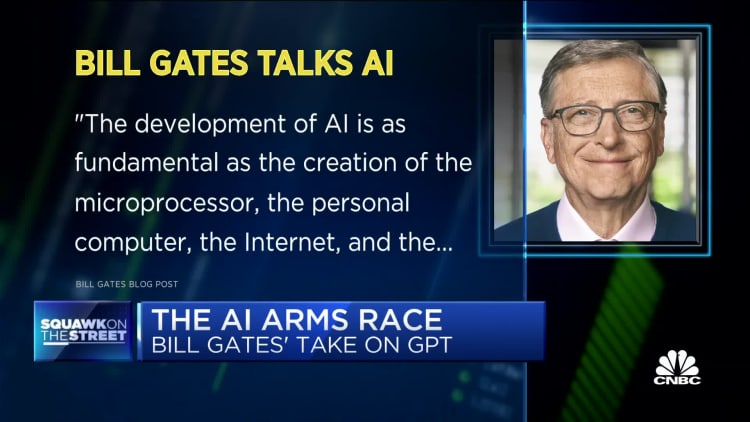

Hinton referenced the power of GPT-4, the most advanced large language model, or LLM, from startup OpenAI, whose technology has gone viral since the chatbot ChatGPT was launched late last year. Here’s how he described what’s happening now:

“If I have 1,000 digital agents who are all exact clones with identical weights, whenever one agent learns how to do something, all of them immediately know it because they share weights,” Hinton told CNBC. “Biological agents cannot do this. So collections of identical digital agents can acquire hugely more knowledge than any individual biological agent. That is why GPT-4 knows hugely more than any one person.”

Hinton was sounding the alarm even before leaving Google. In an interview with CBS News that aired in March, Hinton was asked what he thinks the “chances are of AI just wiping out humanity.” He responded, “It’s not inconceivable. That’s all I’ll say.”

Google CEO Sundar Pichai has also publicly warned of the risks of AI. He told “60 Minutes” last month that society isn’t prepared for what’s coming. At the same time, Google is showing off its own products, such as self-learning robots and Bard, its ChatGPT competitor.

But when asked if “the pace of change can outstrip our ability to adapt,” Pichai downplayed the risk. “I don’t think so. We’re sort of an infinitely adaptable species,” he said.

Over the past year, Hinton has reduced his time at Google, according to an internal document viewed by CNBC. In March 2022, he moved to 20% of full-time. Later in the year he was assigned to a new team within Brain Research. His most recent role was vice president and engineering fellow, reporting to Jeff Dean within Google Brain.

In an emailed statement to CNBC, Dean said he appreciated Hinton for “his decade of contributions at Google.”

“I’ll miss him, and I wish him well!” Dean wrote. “As one of the first companies to publish AI Principles, we remain committed to a responsible approach to AI. We’re continually learning to understand emerging risks while also innovating boldly.”

Hinton’s departure is a high-profile loss for Google Brain, the team behind much of the company’s work in AI. Several years ago, Google reportedly spent $44 million to acquire a company started by Hinton and two of his students in 2012.

His research group made major breakthroughs in deep learning that accelerated speech recognition and object classification. Their technology would help form new ways of using AI, including ChatGPT and Bard.

Google has rallied teams across the company to integrate Bard’s technology and LLMs into more products and services. Last month, the company said it would be merging Brain with DeepMind to “significantly accelerate our progress in AI.”

According to the Times, Hinton said he quit his job at Google so he could freely speak out about the risks of AI. He told the paper, “I console myself with the normal excuse: If I hadn’t done it, somebody else would have.”

Hinton tweeted on Monday, “I left so that I could talk about the dangers of AI without considering how this impacts Google. Google has acted very responsibly.”