
Bumble can only protect you from dick pics on its own apps. But now its image-detecting AI, known as Private Detector, can give every app the power to shut down cyberflashers for you.

First released in 2019 exclusively across Bumble's apps, Private Detector automatically blurs inappropriate images and gives you a heads-up about potential incoming lewdness. You then get the chance to view, block or even report the image. On Monday, Bumble released a revved-up version of Private Detector into the wilds of the internet, offering the tool free of charge to app makers everywhere through an open-source repository.
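For app makers wondering what that flow might look like on their side, here is a minimal sketch of the decision step after an image has been scored. It assumes a binary classifier has already produced a lewdness score between 0 and 1. The names `IncomingImage`, `Presentation`, `present` and the 0.5 cutoff are hypothetical illustrations, not Bumble's API or its actual threshold.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

# Hypothetical cutoff: Bumble has not said what threshold Private Detector uses.
LEWD_THRESHOLD = 0.5


@dataclass
class IncomingImage:
    sender: str
    lewd_score: float  # assumed output of a binary classifier, in [0, 1]


@dataclass
class Presentation:
    blurred: bool
    warning: Optional[str]
    actions: Tuple[str, ...]


def present(image: IncomingImage, threshold: float = LEWD_THRESHOLD) -> Presentation:
    """Decide how a chat client could show an incoming image (illustrative only)."""
    if not 0.0 <= image.lewd_score <= 1.0:
        raise ValueError("lewd_score must be between 0 and 1")
    if image.lewd_score >= threshold:
        # Blur the image, warn the recipient, and let them choose what to do.
        return Presentation(
            blurred=True,
            warning=f"{image.sender} sent an image that may be inappropriate.",
            actions=("view", "block", "report"),
        )
    # Below the cutoff the image is shown normally with no extra prompts.
    return Presentation(blurred=False, warning=None, actions=())


p = present(IncomingImage("match_42", 0.93))
print(p.blurred, p.actions)  # True ('view', 'block', 'report')
```

Keeping the threshold as a parameter reflects the idea that each app could tune how cautious it wants to be.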


Private Detector achieved greater than 98% accuracy during both offline and online tests, the company said, and the latest iteration has been geared for efficiency and flexibility so a wider range of developers can use it. Private Detector’s open-source package includes not just the source code for developers, but also a ready-to-use model that can be deployed as-is, extensive documentation and a white paper on the project.

“Safety is at the heart of everything we do and we want to use our product and technology to help make the internet a safer place for women,” Rachel Hass, Bumble’s vice president of member safety, said in an email. “Open-sourcing this feature is about remaining firm in our conviction that everyone deserves healthy and equitable relationships, respectful interactions, and kind connections online.”

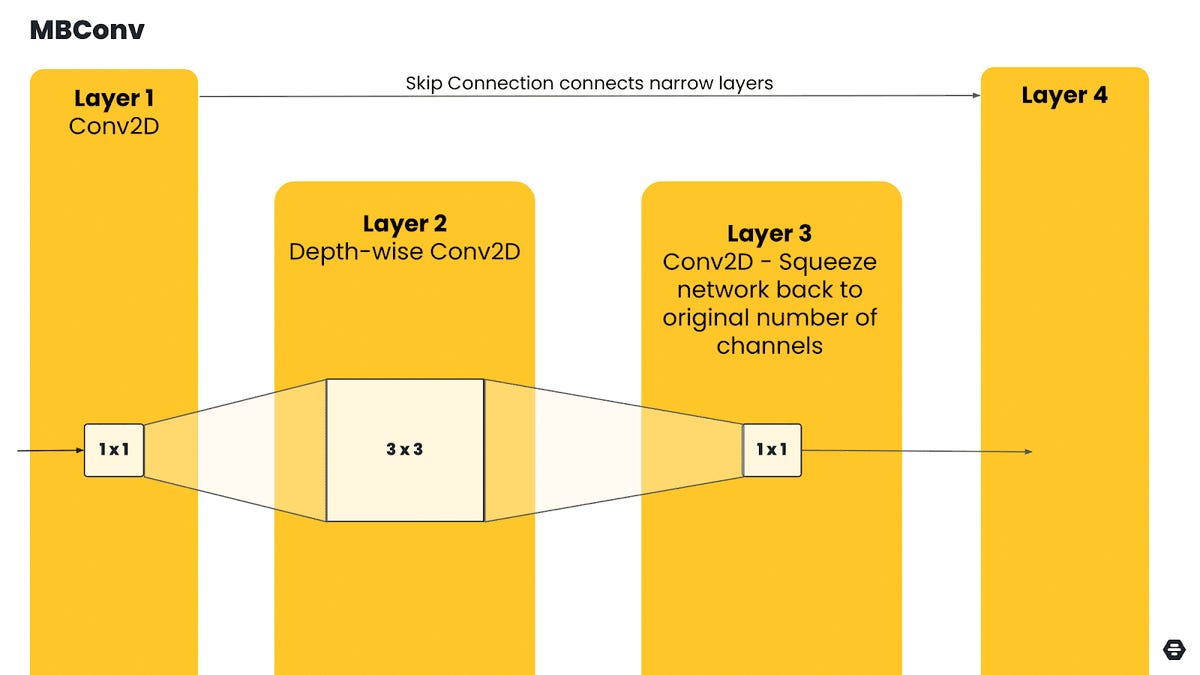

Bumble said its decade of machine learning legwork allowed it to create a new, flexible architecture for the Private Detector neural network that is both faster and more accurate than the 2019 version. New to Private Detector is a binary classifier based on EfficientNetV2, which speeds up training and makes the detector more efficient, and which works in tandem with the tool's other layers to speed up overall execution.


The Private Detector AI model isn't Bumble's only strategy to fight online sexual harassment and cyberflashing. The company has repeatedly lobbied for legislation aimed at stymieing the world's least desirable camera users. In a 2018 survey, Bumble found that one in three women on Bumble had received unsolicited lewd photos and that 96% of them were unhappy to see those pictures. Since then, Bumble has successfully lobbied to get anti-cyberflashing laws on the books in both Texas and Virginia and is currently pushing similar measures in four other states.

"Bumble was one of the first apps to address cyberflashing by giving the power to our community to consensually decide if they would like to see certain photos and creating a safety standard if not. We've been working to address cyberflashing and to help create more online accountability for years, but this issue is bigger than just one company," said Bumble public policy head Payton Iheme.

“We cannot do this alone.”

And the company may not have to. In offering Private Detector for free, Bumble may have just summoned a swarm of support to its cause.
