This story is part of WWDC 2022, CNET’s complete coverage from and about Apple’s annual developers conference.

What’s happening

Apple has announced a new Safety Check feature to help potential victims in abusive relationships.

Why it matters

This is the latest example of the tech industry taking on difficult personal technology issues that don’t have clear or easy answers.

What’s next

Apple is talking with victim-survivor advocacy organizations to identify other features that can help people in crisis.

Among the long-requested and popular new features Apple plans to bring to the iPhone this fall, such as undo send for iMessage texts and emails and a function to find and remove duplicate photos, is one that could mean life or death when it’s used.

On Monday, Apple announced Safety Check, a new feature within the settings of an iPhone or iPad, designed to assist domestic violence victims. The setting, coming this fall with iOS 16, is meant to help someone quickly cut ties with a potential abuser. Safety Check does this either by helping a person quickly see with whom they’re automatically sharing information like their location or photos, or by disabling access and information sharing on every device other than the one in their hands.

Notably, the feature also includes a prominent button at the top right of the screen, labeled Quick Exit. As the name implies, it’s designed to help a potential victim quickly hide that they’d been looking at Safety Check, in case their abuser doesn’t allow them privacy. If the abuser reopens the Settings app, where Safety Check is kept, it will start at the default general settings page, effectively covering the victim’s tracks.

“Many people share passwords and access to their devices with a partner,” Katie Skinner, a privacy engineering manager at Apple, said at the company’s WWDC event Monday. “However, in abusive relationships, this can threaten personal safety and make it harder for victims to get help.”

Safety Check, and the careful way in which it was coded, are part of a larger effort among tech companies to stop their products from being used as tools of abuse. It’s also the latest sign of Apple’s willingness to wade into building technology that tackles sensitive topics. And though the company says it’s earnest in its approach, it has drawn criticism for some of its moves. Last year, the company announced efforts to detect child exploitation imagery on some of its phones, tablets and computers, a move that critics worried could erode Apple’s commitment to privacy.

Still, victim advocates say Apple is one of the few large companies publicly working on these issues. While many tech giants, including Microsoft, Facebook, Twitter and Google, have built and implemented systems designed to detect abusive imagery and behavior on their respective sites, they have struggled to build tools that stop abuse as it’s happening.

Unfortunately, the abuse has gotten worse. A survey of practitioners who work on domestic violence, conducted in November 2020, found that 99.3% had clients who had experienced “technology-facilitated stalking and abuse,” according to the Women’s Services Network, which worked on the report with Curtin University in Australia. The organizations also found that reports of GPS tracking of victims had jumped more than 244% since they last conducted the survey in 2015.

Amid all this, tech companies like Apple have increasingly been working with victim organizations to understand how their tools can be both misused by a perpetrator and helpful to a potential victim. The results are features, like Safety Check’s Quick Exit button, that advocates say are a sign Apple is building these features in what they call a “trauma-informed” way.

“Most people can’t appreciate the sense of urgency” many victims feel, said Renee Williams, executive director of the National Center for Victims of Crime. “Apple’s been very receptive.”

Apple says there are more than a billion iPhones in use around the world.

Apple/Screenshot by CNET

Tough issues

Some of the tech industry’s biggest wins have come from identifying abusers. In 2009, Microsoft helped create image recognition software called PhotoDNA, which is now used by social networks and websites around the world to identify child abuse imagery when it’s uploaded to the internet. Similar programs have since been built to help identify known terrorist recruitment videos, livestreams of mass shootings and other content that large tech companies try to keep off their platforms.

As tech has become more pervasive in our lives, these efforts have taken on greater importance. And unlike adding a new video technology or increasing a computer’s performance, these social issues don’t always have clear answers.

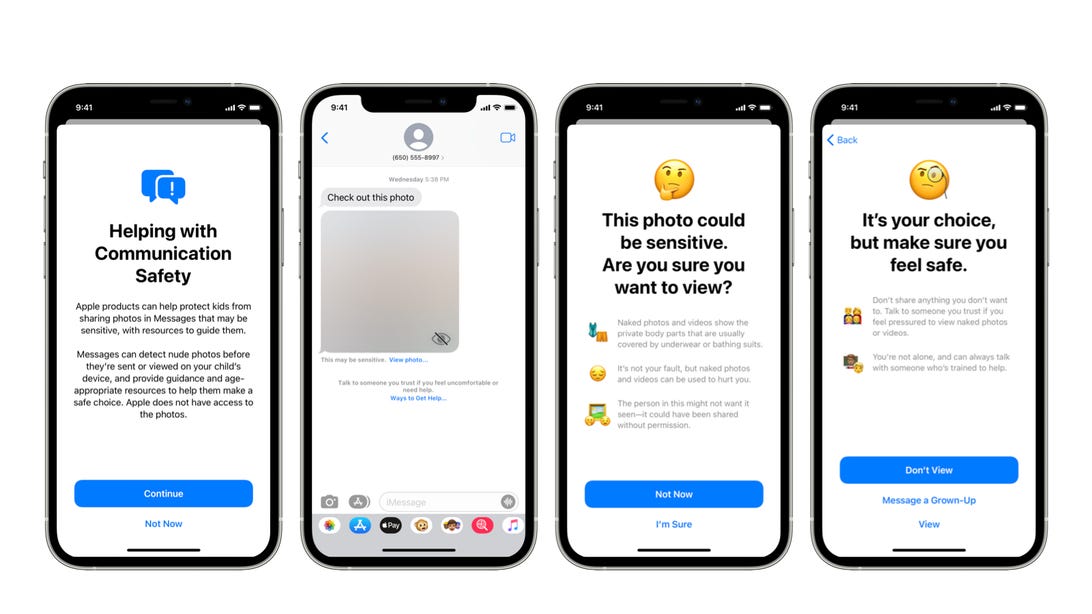

In 2021, Apple made one of its first public moves into victim-focused technology when it announced new features for its iMessage service designed to analyze messages sent to users marked as children to determine whether their attachments contained nudity. If the system suspected an image, it would blur the attachment and warn the person receiving it to make sure they wanted to see it. Apple’s service would also point children to resources that could help them if they were being victimized through the service.

At the time, Apple said it built the message-scanning technology with privacy in mind. But activists worried that Apple’s system was also designed to alert an identified parent if their child chose to view the suspected image anyway. That, some critics said, could incite abuse from a potentially dangerous parent.

Apple’s additional efforts to detect potential child abuse images that might be synced to its photo service from iPhones, iPads and Mac computers were criticized by security experts who worried the system could be misused.

Still, victim advocates acknowledged that Apple was one of the few device makers working on tools meant to support victims of potential abuse as it’s happening. Microsoft and Google didn’t respond to requests for comment about whether they plan to introduce features similar to Safety Check to help victims who might be using Windows and Xbox software for PCs and video game consoles, or Android mobile software for phones and tablets.

Apple introduced a system for child safety in iMessage last year.

Apple

Learning, but a lot to do

The tech industry has been working with victim organizations for over a decade, looking for ways to build a safety mindset into their products. Advocates say that in the past few years especially, many safety teams have grown within the tech giants, staffed in some cases with people from the nonprofit world who had worked on the issues the tech industry was taking on.

Apple started consulting with some victims’ rights advocates about Safety Check last year, asking for input and ideas on how best to build the system.

“We’re starting to see recognition that there’s a corporate or social responsibility to make sure your apps can’t be too easily misused,” said Karen Bentley, CEO of Wesnet. She said that’s especially challenging because, as technology has evolved to become easier to use, so has its potential to be a tool of abuse.

That’s part of why she says Apple’s Safety Check is “sensible,” because it can quickly and easily separate someone’s digital information and communications from their abuser. “If you’re experiencing domestic violence, you’re likely to be experiencing some of that violence in technology,” she said.

Though Safety Check has moved from an idea into test software and will be made widely available with the iOS 16 software updates for iPhones and iPads in the fall, Apple said it plans more work on these issues.

Unfortunately, Safety Check doesn’t extend to ways abusers might be tracking people using devices they don’t own, such as when someone slips one of Apple’s $29 AirTag trackers into a victim’s coat pocket or onto their car to stalk them. Safety Check also isn’t designed for phones set up under child accounts for people under the age of 13, though the feature is still in testing and could change.

“Unfortunately, abusers are persistent and are constantly updating their tactics,” said Erica Olsen, project director for Safety Net, a program from the National Network to End Domestic Violence that trains companies, community groups and governments on how to improve victim safety and privacy. “There will always be more to do in this space.”

Apple said it’s expanding training for employees who interact with customers, including salespeople in its retail stores, so they know how features like Safety Check work and can teach them when appropriate. The company has also created guidelines to help its support staff identify and assist potential victims.

For example, AppleCare teams are being taught to listen for when an iPhone owner calls expressing concern that they don’t have control over their own device or their own iCloud account. In another case, AppleCare can guide someone through removing their Apple ID from a family group.

Apple also updated its Personal Safety User Guide in January to show people how to reset and regain control of an iCloud account that might be compromised or used as a tool for abuse.

Craig Federighi, Apple’s head of software engineering, said the company will keep expanding its personal safety features as part of its broader commitment to its customers. “Protecting you and your privacy is, and will always be, the center of what we do,” he said.